Computers have always held a special spot in my brain. One of my earliest memories is of playing this version of pinball for Windows on a machine that I think must have been running Windows XP at the time. This was around 2003, right before my family up and moved to another continent. I remember trying to play it one last time before we moved out of that house, only to find that the computer had already been packed up. Weirdly, I don't remember ever playing it again, even though I must have been a little obsessed with it, seeing as I wanted it to be my last act before leaving for another landmass.

Computers have been a part of my life since the beginning, and it's been awesome to watch them go from quirky machines that make you mad all the time to quirky machines that make you mad only sometimes. Jokes aside, though, I've learned a lot about computers in the twenty years since I first messed around with one, and the machines themselves have come a long way too. This isn't a tech blog, but with how prevalent these machines are, it would be a disservice not to talk about them now and again. In fiction, they can make or break a story: they can riddle it with plot holes, or fill gaps that you'd have a terrible time bridging otherwise. Some stories can only be told with computers in the mix, like stories about the future of our society or about the growing digital world. Sometimes it feels like computers both solve and create all the problems in the world. A resource so rich with conflict must be tapped!

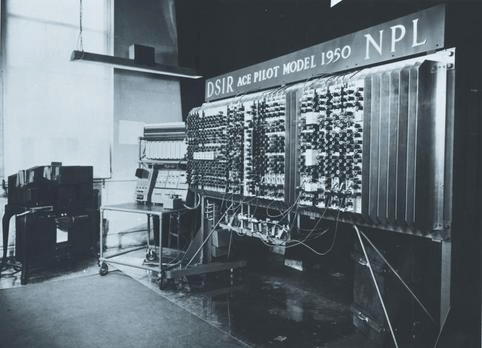

One of the most popularized episodes in the history of computing is the true story of Alan Turing. He's not called the father of theoretical computer science for no reason. Everyone knows about his exploits cracking Enigma during World War Two, but after the war he continued working on computers at Britain's National Physical Laboratory (NPL), where he designed this beast:

The Pilot Model Automatic Computing Engine, or Pilot ACE, which first ran in 1950. That's a way cooler name than any modern computer, by the way. It stored its memory in something called a mercury delay line. Delay lines were originally used in older systems to delay the propagation of analog signals, often for radar, but here the mercury delay line was used to hold onto information for as long as necessary. It worked by turning electrical pulses into sound waves and sending them through a tube of mercury. At the far end, the sound was converted back into electrical pulses, amplified, and fed back in at the start, so the data kept circulating for as long as the machine needed it. It could store a whopping 32 instructions in each of its 12 delay lines, for a grand total of 384 instructions.
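To make the recirculation idea concrete, here is a toy Python model of one delay line. It's a sketch under loose assumptions, not a description of the Pilot ACE's actual circuitry: the class and method names are made up for this post, each slot holds a whole word rather than individual acoustic pulses, and one `rotate` call stands in for one word's worth of travel time through the mercury. What it does capture is the big quirk of delay-line memory: you can only read or write a word when it happens to be passing the end of the tube, so how long you wait depends on where that word is in its cycle.

```python
from collections import deque


class DelayLine:
    """Toy model of one recirculating delay line (hypothetical, for illustration).

    Index 0 of the deque is the word currently arriving at the output end.
    Advancing the line moves that word back to the input end, so data
    survives only by circulating.
    """

    def __init__(self, words: int = 32):
        self.line = deque([0] * words)
        self.front = 0  # address of the word currently at the output end

    def _wait_for(self, address: int) -> int:
        """Let words circulate until `address` reaches the output end; return ticks waited."""
        if not 0 <= address < len(self.line):
            raise IndexError("address out of range")
        waited = 0
        while self.front != address:
            self.line.rotate(-1)  # the output word re-enters at the input end
            self.front = (self.front + 1) % len(self.line)
            waited += 1
        return waited

    def write(self, address: int, value: int) -> int:
        waited = self._wait_for(address)
        self.line[0] = value  # overwrite the word as it passes the end
        return waited

    def read(self, address: int) -> tuple[int, int]:
        waited = self._wait_for(address)
        return self.line[0], waited


# 12 lines of 32 words each, matching the figures above.
memory = [DelayLine(32) for _ in range(12)]
print(sum(len(d.line) for d in memory))  # 384

memory[0].write(5, 42)
print(memory[0].read(5))  # (42, 0)
print(memory[0].read(4))  # (0, 31)
```

Reading address 5 right after writing it costs no waiting at all, but reading address 4 means sitting through almost a full lap of the line.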

Around the same time, another couple of Brits, Kathleen and Andrew Donald Booth, were designing the first assembly language. They released their work in a book called 'Coding for A.R.C.', where A.R.C. stood for Automatic Relay Computer. The point of an assembly language was to provide easy-to-read instructions that a program called an assembler could then translate into machine code. To give you some perspective, here is an instruction written in assembly versus the same instruction written in machine code; both add the numbers held in two registers and place the result in a third.

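Taking an instruction from the modern MIPS instruction set as the example:

Assembly: `add $6, $1, $2`

Machine code: `000000 00001 00010 00110 00000 100000`

The machine code breaks down into six fields, `op | rs | rt | rd | shamt | funct`: the operation type (0), the two source registers (1 and 2), the destination register (6), a shift amount that addition doesn't use, and a function code (`100000`) that tells the processor to add.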
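An assembler's core job is mostly bookkeeping: look up the mnemonic, pack each register number into its field, and emit the bits. The Python sketch below does that for the example above, whose six-field layout comes from the MIPS instruction set. It's an illustration under heavy assumptions, not how the Booths' assembler or any real MIPS assembler is written: it only understands `add` and `sub`, only accepts numeric register names like `$6`, and the function names are made up for this post.

```python
# A tiny assembler for two MIPS R-type instructions (illustrative subset only).
FUNCT_CODES = {"add": 0b100000, "sub": 0b100010}


def parse_register(token: str) -> int:
    """Turn a register token such as '$6,' into the register number 6."""
    return int(token.strip(",").lstrip("$"))


def assemble_rtype(line: str) -> str:
    """Translate e.g. 'add $6, $1, $2' into its six binary fields."""
    mnemonic, *operands = line.split()
    rd, rs, rt = (parse_register(t) for t in operands)  # MIPS writes the destination first
    fields = [
        (0, 6),                      # op: 0 for every R-type instruction
        (rs, 5),                     # rs: first source register
        (rt, 5),                     # rt: second source register
        (rd, 5),                     # rd: destination register
        (0, 5),                      # shamt: shift amount, unused by add/sub
        (FUNCT_CODES[mnemonic], 6),  # funct: picks the actual operation
    ]
    return " ".join(format(value, f"0{width}b") for value, width in fields)


print(assemble_rtype("add $6, $1, $2"))  # 000000 00001 00010 00110 00000 100000
```

Swapping in `sub $3, $4, $5` gives `000000 00100 00101 00011 00000 100010`: same layout, different registers and function code. That's the whole trick that saved programmers from writing those bits by hand.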

To program the first computers before assembly language was invented, human coders had to read and write these strings of binary directly. Obviously that wasn't a good long-term solution. Thanks to Kathleen and Andrew, we no longer have to program computers in such an unfriendly way. Not that assembly language is easy, but it paved the way for higher-level languages like Fortran, ALGOL, COBOL, and later the now-ubiquitous C programming language.

Speaking of C, Dennis Ritchie and Ken Thompson are two people whose names you rarely hear outside of the programming world. I find it interesting that Alan Turing is a household name, while other pioneers are rarely mentioned by the layperson. I wonder how long it'll be before someone makes a movie about these two. They certainly deserve it. Ritchie created the C programming language, building on Thompson's earlier language B, and C (along with its descendant C++) is what much of Windows is written in. Together they also built the Unix operating system, first in assembly language and later rewritten in C. macOS descends from Unix by way of BSD, and Linux was modeled closely on it. In other words, these two laid the foundations for basically all modern computing. No matter if you're a Windows apologist, an Apple shill, or a Linux enthusiast (I'm not biased), you have these two to thank for how your machine functions.

It doesn’t stop there though. Those two designed and built so many programs commonly used today that it’s hard to even list them all. And you know what’s crazy? They did all this without access to computer screens. The output of computers at the time was PRINTED ON PAPER. Every time they wanted to test something, they had to print that shit out. I cannot fathom the patience it would take to achieve anything they did.

Ken Thompson is actually still alive, by the way. I've been talking about him in the past tense, but it's not like he ever stopped being a developer. The man is a legend, and as of 2024 he still works in the industry at Google. There he co-designed a newer programming language called Go alongside Rob Pike and Robert Griesemer, which was released in 2009.

Imagine helping create a language around 1970 that's not only still in use, but still the best tool for the job in many cases, and then attempting to one-up yourself nearly four decades later. It's hard to wrap your head around.

These are just a few of the many thousands of amazing people in the world of computers. Frankly, the whole industry is a bit of an untapped well for writers like me. So many amazing stories are out there to dig up, but few of them have had their chance in the spotlight. I'd be happy to see scriptwriters and novelists picking up these stories and giving them the artistic treatment they truly deserve. But hey, maybe one of these days I'll see a movie poster with Matt Damon and Ben Affleck in front of a computer or something, and I'll know everything is right in the world.

Thank you for reading,

Benjamin Hawley